It’s time to start designing for transparency

Sheryl Cababa

In the 1830s, tabloid publisher Benjamin Day lowered the price of his paper to a single penny. It was a dramatic five-cent drop for the customer, paid for by advertisements that appeared on the pages of the New York Sun for the first time. This new model meant that the readers of the Sun were no longer the customers, but in fact the product itself. If this sounds familiar, it’s because we are wrestling with the weight of this tradeoff today in the context of social media. What does it mean when the platforms we use to keep up with college friends and cat videos use us back? And how is it that barely anyone truly understands how their data and their web presence are being used?

News that Cambridge Analytica exploited the data of 50 million Facebook users without their awareness is the latest and most shocking example of our data being used in damaging ways. But it is by no means singular. There has been a steady drumbeat of stories that reveal the hidden cost of “free” platforms. Uber tracks data in such detail that it knows people will pay surge pricing if their phone battery is running low. Fitness app Strava inadvertently revealed sensitive military locations by making a marketing campaign from running maps. Just as I was writing this, Under Armour revealed that a data breach had affected 150 million users of the MyFitnessPal app. Taken together, these stories share a lack of transparency around 1) what these organizations know about each and every one of their users, and 2) how much of this knowledge these organizations actually share with their users. In this opaque and secretive system, users are left vulnerable and powerless to protect their own data.

I’m not arguing against social media platforms, and it’s safe to say they are here to stay. After all, the model has existed since tabloids cost a penny. So where do we go from here? This is my challenge to all designers: It’s time to start designing for transparency rather than delight. Trust is the most important thing that any organization can earn from individuals, and the best way to earn that trust is by being transparent. Let’s use our role as user advocates to help organizations take responsibility by rethinking the ways in which they communicate what data is being used, who is using it, and where and how.

To begin, I propose the following five principles for designing for transparency.

1. Explain what data you collect, why you collect it, and how it makes you money.

Online clothing retailer Everlane is a company that has managed to show its customers, in a simple way, how it makes money from its products through a transparent pricing structure. We can draw inspiration from this to push beyond the simple understanding of “Your data is used to advertise.” Tech companies collect and scrape enormous amounts of data: location, search results, purchase history, and even text messages. We need to be ready to ask ourselves: do we actually need access to a user’s location information? For example, ridesharing services work just fine without your location data, but they present language to customers that makes it seem as though location services are required for the app to work. Data disclosures should be as detailed as the data itself, and we need to stop hiding this information through opaque practices or omission. Make it clear to your user in specific terms. If you can’t explain it or don’t want to, then you probably shouldn’t be doing it.

2. Allow your design to explain your algorithms.

The podcast ‘Reply All’ recently ran an episode titled ‘Is Facebook Spying On You?’ in which people presented anecdotal evidence of talking about random products and then immediately seeing ads for them in their Facebook newsfeed, causing them to wonder if the microphone in their phone was being used to listen in on their buying decisions. In short, the Reply All hosts concluded that coincidences in newsfeed ads had more to do with the ways Facebook tracks your movement all over the web through an analytics tool called Facebook Pixel. But the suspicion lingers because people do not understand what they see in their feed and why. To address this and build trust, we should design space for people to understand “Why am I seeing this ad?” Advertisers, after all, are able to target everyone from ‘new parents’ to people who like both KitKats and Nike shoes. This goes beyond advertisements as well. For any kind of feed, people should have transparency as to why the algorithm surfaces what they are seeing. Give options that will reveal these demographics to your end users, and allow them to weigh in and set options on what the algorithm provides them. Some companies are starting to respond to customers’ algorithm frustration. Pinterest just released a feature in which you can view only posts from people you follow (no “recommendations”!) and in chronological order as well. Hopefully more companies will follow suit in empowering their customers to take control of their feed.
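For teams that want to prototype a “Why am I seeing this ad?” panel, here is a minimal sketch in Python. It is not how any real platform works: the `TargetingReason` type, the `explain_ad` function, and the advertiser and signal names are all hypothetical, chosen only to show that each targeting signal can be listed next to where it came from.

```python
from dataclasses import dataclass


@dataclass
class TargetingReason:
    """One hypothetical signal an advertiser used to target the viewer."""
    attribute: str  # for example "interest: running shoes"
    source: str     # where the signal came from, in plain language


def explain_ad(advertiser, reasons):
    """Build the plain-language text for a 'Why am I seeing this ad?' panel."""
    if not reasons:
        # Say so explicitly when no personal data was involved.
        return f"{advertiser} is showing this ad to a broad audience, not based on your data."
    lines = [f"You are seeing this ad from {advertiser} because:"]
    for reason in reasons:
        lines.append(f"- {reason.attribute} (source: {reason.source})")
    return "\n".join(lines)


# Illustrative data only; "Example Shoes" is a made-up advertiser.
print(explain_ad("Example Shoes", [
    TargetingReason("interest: running shoes", "pages you liked"),
    TargetingReason("visited the advertiser's website", "tracking pixel on that site"),
]))
```

The point of the sketch is the pairing of each attribute with its source: a panel that only says “based on your interests” leaves the suspicion in place, while one that names the source gives people something to act on.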

3. Allow your customers to opt into data collection, rather than opting out of it.

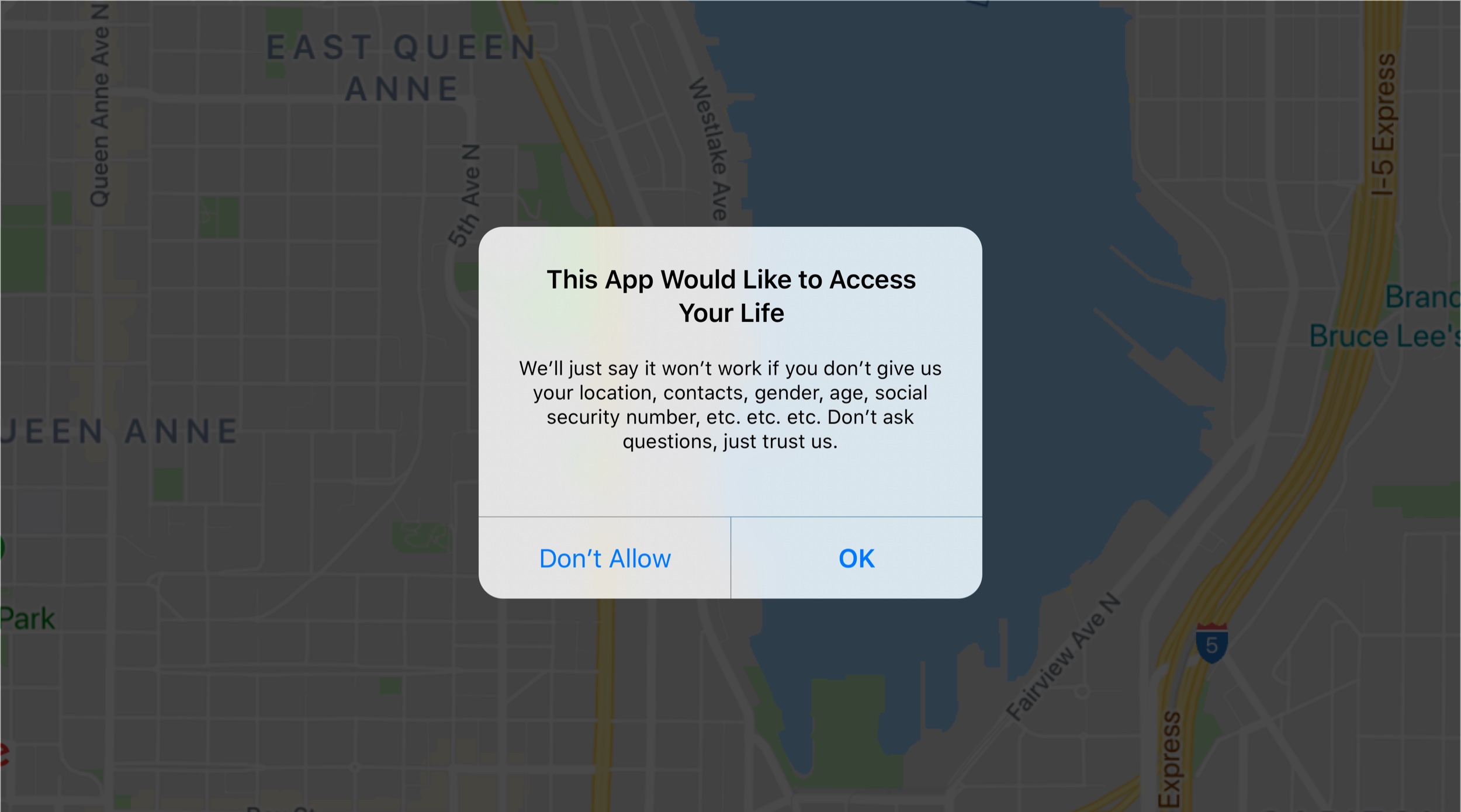

There’s nothing more powerful than the default. Have you ever downloaded a new app and seen the notification of all the things it wants access to, like all your contacts? Did it give you pause, but you went ahead and tapped “Allow” anyway?

Uber abused this in the most egregious way when, in 2016, it quietly changed its default setting to track users all the time, rather than just when they were using the app. Users were outraged by the change, and Uber had to walk it back less than a year later. Designers need to insist on transparency about users’ privacy settings, and on solutions that allow users to opt in to data collection rather than opt out of it. This forces our organizations to justify the need for data rather than just collecting it because they can. Zeynep Tufekci, a professor of Information Science at the University of North Carolina, notes: “As long as the default is tracking, and as long as the burden is on the user, we’re not going to get anywhere.”
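In practice, opt-in comes down to what a settings object contains before the user has touched it. The sketch below, in Python, is an illustration under assumed names: `Purpose`, `ConsentSettings`, and the three purposes listed are hypothetical and not taken from any real app.

```python
from dataclasses import dataclass, field
from enum import Enum


class Purpose(Enum):
    """Hypothetical data-collection purposes, for illustration only."""
    LOCATION_WHILE_IN_USE = "location while using the app"
    LOCATION_ALWAYS = "location in the background"
    CONTACTS = "contacts"


@dataclass
class ConsentSettings:
    # Empty by default: nothing is collected until the user opts in.
    granted: set = field(default_factory=set)

    def opt_in(self, purpose):
        self.granted.add(purpose)

    def opt_out(self, purpose):
        self.granted.discard(purpose)

    def allows(self, purpose):
        return purpose in self.granted


settings = ConsentSettings()
print(settings.allows(Purpose.LOCATION_ALWAYS))        # False: the default is no tracking
settings.opt_in(Purpose.LOCATION_WHILE_IN_USE)
print(settings.allows(Purpose.LOCATION_WHILE_IN_USE))  # True: the user chose this
print(settings.allows(Purpose.LOCATION_ALWAYS))        # False: still needs its own consent
```

Because the default set is empty, any new kind of collection has to be requested and justified to the user instead of being switched on silently.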

4. Examine and consider how bad actors could use your system. Then prevent it, prepare for it, and be ready to react in real time if it happens.

Designers often seek to change, update or tweak features with the best of intentions, and inadvertently expose users to risk, exploitation or abuse because not enough time and resources have been invested in understanding the possible impact of the change. In 2017, Twitter quietly removed the notifications that told users they had been added to lists. After worried users raised concerns (as a woman, I want to know if a misogynist troll adds me to a hate-filled list of women), Twitter’s safety team backtracked after only two hours. It’s a reminder that designers need to consider how the worst person they can think of might use not just their platform, but its features, and that includes privacy settings, notifications (or the lack thereof), and, as the Strava example shows, aggregated location tracking. This is why it is so important to be transparent not just about what you’re collecting, but about how you will eventually use that data.

5. Media literacy matters. It’s time we prioritized data literacy.

Media literacy matters because it enables people to discern reputable information, seek out sources and make wise decisions about the content they consume. We should apply that same logic and advocate for data literacy, an active form of transparency that educates people on the full truth of data collection so that they can be empowered to make proactive decisions about the information they share. If we implemented the principles outlined above, the result would be far clearer communication about what data is being collected and why, as well as increased control over what people can opt into and how they can manage the algorithms that shape their experiences. Designing for data literacy would represent a significant shift in how data is conveyed today, moving us away from an opaque understanding of the most basic “free platform in exchange for data” transaction that has left so many users feeling distrustful, confused and surprised by the actions of social media platforms.

Sunlight is the best disinfectant

After entrusting enormous amounts of their personal information to social media platforms, users are understandably starting to question whether the deal was worth it, when so many services have abused and broken that trust in exchange for revenue. Unless there are changes to the way we communicate data collection, algorithm decisions and advertising models, trust will continue to erode. And that’s where designers can use their skills to help take responsibility for how we are collecting and using people’s data, and to help restore trust. If what you are doing gives you pause as a customer, you should respond to that feeling. We need to reaffirm our position as user advocates, which has always been the key to being a good designer in technology, and design for transparency and data literacy just as often as we design for joy, delight, and engagement.

In the anti-corruption world, there’s a saying that sunlight is the best disinfectant. Let’s let go of the opaque practices that harm our users and add sunlight to our design work.